Why LSTM Is Better Than RNN

CNNs and RNNs have different hyperparameters (filter dimension and number of filters for CNNs, hidden state dimension for RNNs, and so on). A plain ANN is generally considered less powerful than a CNN or an RNN.


As my advisor put it in one of our chats: basically, the Kalman filter (and, in some sense, the HMM) is to the RNN what the linear classifier is ...

Why is an LSTM better than an RNN? The biggest problem with RNNs in general, including LSTMs, is that they are hard to train because of the exploding and vanishing gradient problems. LSTMs and GRUs are more effective in practice than standard RNNs because they explicitly attempt to address these problems. Both are numerical problems in which the values of the gradient vector (the vector that contains the partial derivatives of the loss function with respect to the parameters of the model) either shrink toward zero or grow without bound as they are propagated back through time. CNNs tend to be much faster than RNNs, by some accounts around 5 times faster.
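To make the vanishing and exploding gradient problems concrete, here is a minimal sketch using a scalar recurrence. The function names and the choice of a one-dimensional state are assumptions made for illustration; a real RNN multiplies by a Jacobian matrix at each step instead of a single number, but the repeated-product effect is the same.

```python
import math


def gradient_through_time(w: float, steps: int, h0: float = 0.5) -> float:
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh(w * h_{t-1})^2)
        grad *= w * (1.0 - h * h)
    return grad


def linear_gradient_through_time(w: float, steps: int) -> float:
    """Same quantity for the linear recurrence h_t = w * h_{t-1}: exactly w ** steps."""
    return w ** steps


if __name__ == "__main__":
    # |w| < 1: the product of per-step factors shrinks toward zero (vanishing).
    print(gradient_through_time(0.5, 50))
    # |w| > 1 without a squashing nonlinearity: the product blows up (exploding).
    print(linear_gradient_through_time(1.5, 50))
```

The tanh version can only shrink the gradient further, since its derivative is at most 1, which is why the exploding case is shown with the linear recurrence.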

It's hard to draw fair comparisons. CNNs are often considered more powerful than ANNs and RNNs, although this depends on the task. A summary of all three networks in a single table:

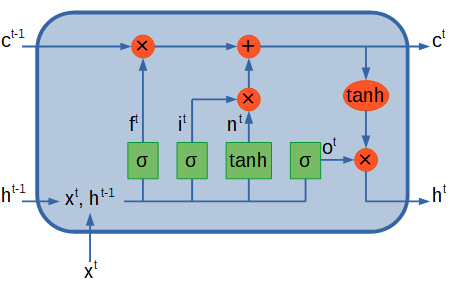

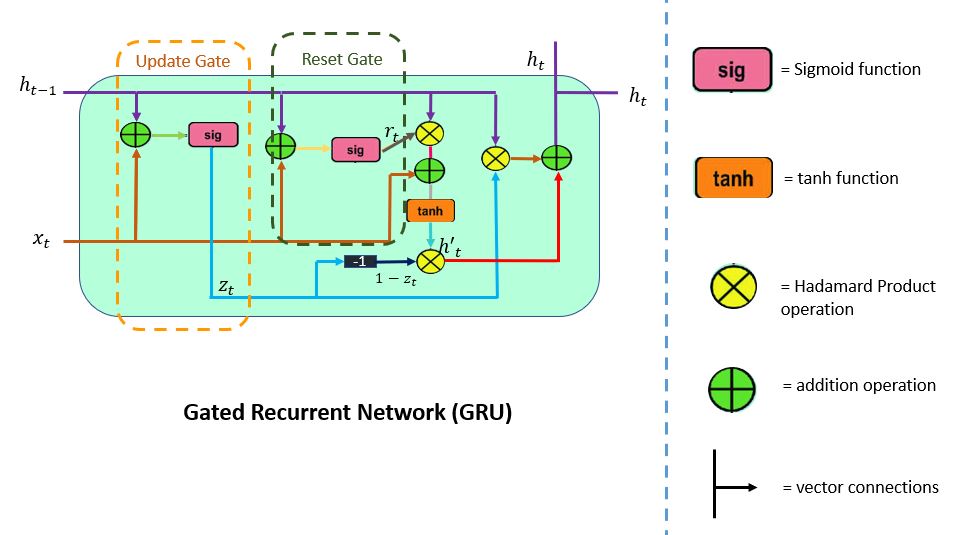

RNNs offer less feature compatibility than CNNs. To sum up, the GRU outperformed the traditional RNN. Because tanh outputs values between -1 and 1, the state can be both increased and decreased; that's why tanh is used to determine the candidate values that get added to the internal state.

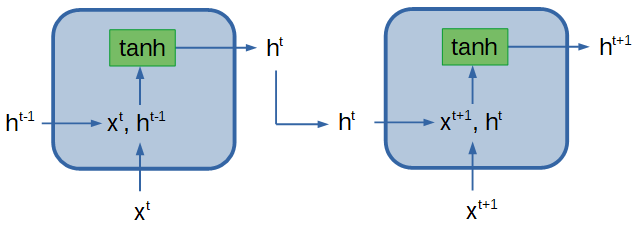

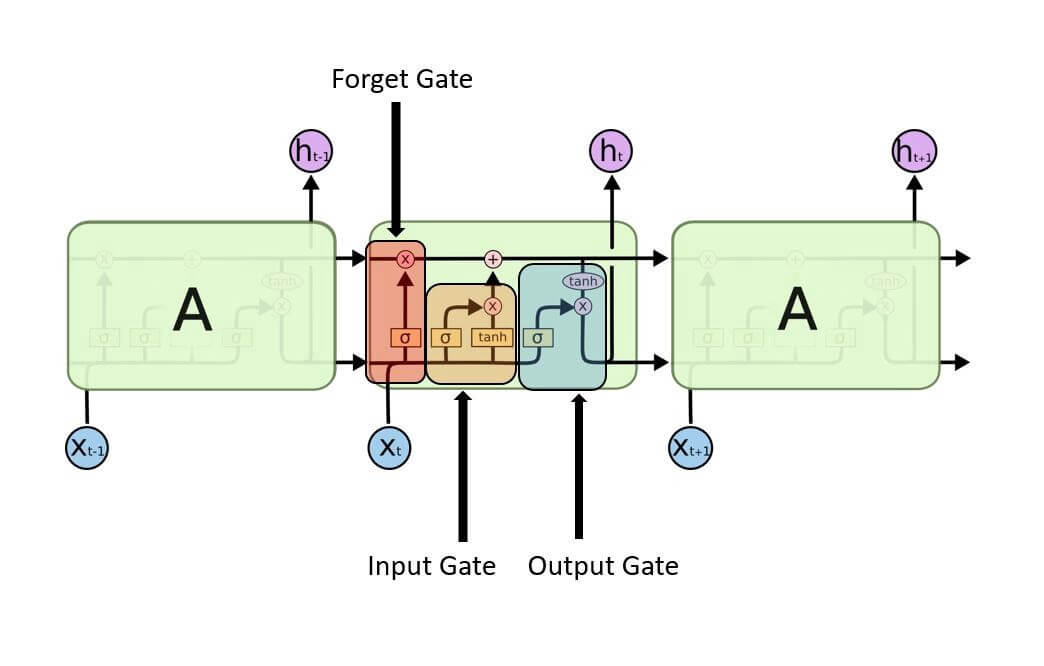

If you compare the results with the LSTM, the GRU uses fewer tensor operations. We can say that when we move from an RNN to an LSTM (Long Short-Term Memory), we are introducing more controlling knobs, which control the flow and mixing of inputs according to the trained weights. Information in both RNNs and LSTMs is retained thanks to previously computed hidden states.

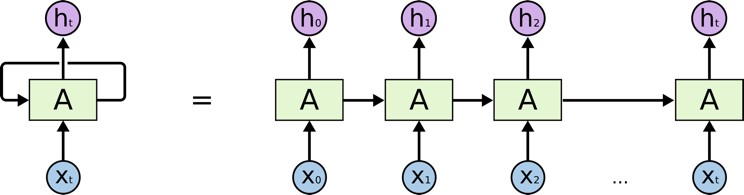

Input with spatial structure, like images, cannot be modeled easily with the standard (vanilla) LSTM. ... as Support Vector Machine (SVM) and deep learning algorithms such as Long Short-Term Memory (LSTM). The point is that, in a plain RNN, the encoding of a specific word is retained only for the next time step. This means that the encoding of a word strongly affects only the representation of the next word; its influence is quickly lost after a few time steps.

Which one is better, LSTM or GRU? A unidirectional LSTM only preserves information from the past, because the only inputs it has seen are from the past. The goal is to find whether the conventional way of performing the regression task with SVM holds good for stock market prediction, or whether the newer concepts like ...

Check out the diagrams and explanations in Chris Olah's Understanding LSTM Networks for more. So the LSTM gives us the most controllability and thus, often, better results. The key difference between a GRU and an LSTM is that a GRU has two gates (reset and update), while an LSTM has three gates (input, output, and forget).
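One way to see what the extra gates cost is to count trainable parameters per layer. The sketch below assumes the textbook formulation with one weight block and one bias vector per gate plus one for the candidate state; the function name and the `BLOCKS` table are hypothetical, and library implementations may keep extra bias vectors, so their reported counts can differ.

```python
# Number of (weight matrix, bias) blocks per cell type in the textbook formulation:
# a plain RNN has one block, a GRU has two gates plus a candidate state,
# and an LSTM has three gates plus a candidate state.
BLOCKS = {"rnn": 1, "gru": 3, "lstm": 4}


def recurrent_param_count(cell: str, input_dim: int, hidden_dim: int) -> int:
    """Count parameters of one recurrent layer (textbook formulation, single bias per block)."""
    # Each block maps the concatenation [x_t, h_{t-1}] to a hidden-sized vector.
    per_block = hidden_dim * (input_dim + hidden_dim) + hidden_dim
    return BLOCKS[cell] * per_block


if __name__ == "__main__":
    for cell in ("rnn", "gru", "lstm"):
        print(cell, recurrent_param_count(cell, input_dim=10, hidden_dim=20))
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same size, which is consistent with it being the lighter of the two.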

For example, for text (a categorical time series of words or characters), CNNs are better at some things while LSTMs are better at others. The GRU is less complex than the LSTM because it has fewer gates. The CNN Long Short-Term Memory Network, or CNN LSTM for short, is an LSTM architecture specifically designed for sequence prediction problems with spatial inputs, like images or videos.

Using a bidirectional network runs your inputs in two ways, one from past to future and one from future to past, and what distinguishes this approach from a unidirectional one is that in the ... Theoretically, RNNs (including LSTMs, of course) can process varying-length series straightforwardly, while CNNs or other NNs need some extra work. Facial recognition and computer vision.
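The bidirectional idea mentioned above can be sketched with a plain tanh RNN in NumPy: run one pass over the sequence in order, run a second pass over the reversed sequence, and concatenate the two hidden states at each time step. The function names and parameter layout are assumptions for illustration, not any library's API, and a real model would use LSTM or GRU cells instead of the plain cell used here.

```python
import numpy as np


def run_rnn(xs, W_x, W_h, b):
    """Run a plain tanh RNN over xs (shape: steps x input_dim); return all hidden states."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in xs:  # works for any number of steps, so varying-length series need no padding
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h)
    return np.stack(states)


def run_bidirectional(xs, fwd_params, bwd_params):
    """Concatenate a past-to-future pass and a future-to-past pass at every time step."""
    forward = run_rnn(xs, *fwd_params)
    # Process the reversed sequence, then flip the states back to the original time order.
    backward = run_rnn(xs[::-1], *bwd_params)[::-1]
    return np.concatenate([forward, backward], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    make = lambda: (rng.normal(size=(4, 3)), rng.normal(size=(4, 4)), np.zeros(4))
    out = run_bidirectional(rng.normal(size=(5, 3)), make(), make())
    print(out.shape)  # one row per time step, forward and backward states side by side
```

At the first time step the forward half has seen only the first input, while the backward half already summarizes the whole sequence; this is the sense in which the bidirectional variant uses information from both past and future.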

The practical limit for LSTMs seems to be around 200 steps with standard gradient descent and random initialization. To summarize, the linearity of the model makes the Kalman filter and HMMs highly constrained models compared to RNNs. However, with enough data you'll get an RNN or LSTM that matches the nonlinearities of your real data well, which in turn makes its predictions much more accurate than those of ARMA/ARIMA.

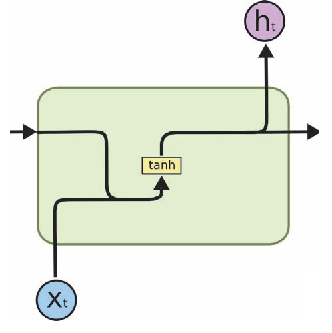

I have done some projects on text classification and relation extraction using CNNs and RNNs, specifically LSTM and GRU. LSTMs and other RNN models are sequential and must process their input in order, unlike transformer models. The LSTM is a specific implementation of an RNN that introduces a more complex neuron, which includes a forget gate.

LSTMs (Long Short-Term Memory networks) deal with these problems by introducing new gates, such as input and forget gates, which allow better control over the gradient flow and enable better preservation of long-range dependencies. The long-range dependency problem of the RNN is addressed in the LSTM by increasing the number of layers inside the repeating module: four interacting layers instead of a single tanh layer. There isn't a clear answer as to which variant performs better.
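The gating can be written out as a single time step. The following NumPy sketch uses the standard LSTM equations; the function name, the stacked weight layout `W` (four blocks in the order forget, input, output, candidate), and the argument order are choices made for this example and do not mirror any particular library.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, input + hidden) and b has shape (4 * hidden,).
    Returns the new hidden state h and the new cell state c.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])  # forget gate: how much of c_prev to keep
    i = sigmoid(z[1 * hidden:2 * hidden])  # input gate: how much of the candidate to add
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * hidden:4 * hidden])  # candidate values in [-1, 1]
    # Additive update: the gradient with respect to c_prev is scaled by f,
    # not squashed through another nonlinearity.
    c = f * c_prev + i * g
    h = o * np.tanh(c)
    return h, c
```

The additive cell update `c = f * c_prev + i * g` is the part that helps gradients survive over many steps: when the forget gate stays close to 1, the cell state passes through almost unchanged.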

If the dataset is small, a GRU is often preferred; for a larger dataset, an LSTM. At its core, an LSTM preserves information from inputs that have already passed through it using the hidden state. The results of the two, however, are almost the same.

A GRU takes less time to train. Usually you can try both and conclude which one works better. The GRU, a cousin of the LSTM, doesn't have a second tanh, so in a sense the second one is not necessary.
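For comparison, a single GRU step can be sketched as follows. It has only the update and reset gates and a single tanh for the candidate state, with no separate cell state and no second tanh on the output. The function name and the separate weight arguments are assumptions for this example; note also that some references swap the roles of `z` and `1 - z` in the final interpolation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x, h_prev, W_z, W_r, W_h, b_z, b_r, b_h):
    """One GRU time step; each W_* has shape (hidden, input + hidden)."""
    xh = np.concatenate([x, h_prev])
    z = sigmoid(W_z @ xh + b_z)  # update gate: how much of the new candidate to take
    r = sigmoid(W_r @ xh + b_r)  # reset gate: how much of h_prev feeds the candidate
    # The only tanh in the cell: candidate state built from x and the reset-scaled h_prev.
    h_tilde = np.tanh(W_h @ np.concatenate([x, r * h_prev]) + b_h)
    # Interpolate between the old state and the candidate; no output gate, no second tanh.
    return (1.0 - z) * h_prev + z * h_tilde
```

Trying both cell types on the same task, as suggested above, then comes down to swapping this step function for an LSTM step and comparing validation results.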

You can learn more about the ... A gentle introduction to CNN LSTM recurrent neural networks, with example Python code. Due to the parallelization ability of the transformer mechanism, much more data can be processed in the same amount of time with transformer models.

Facial recognition, text digitization, and natural language processing.
